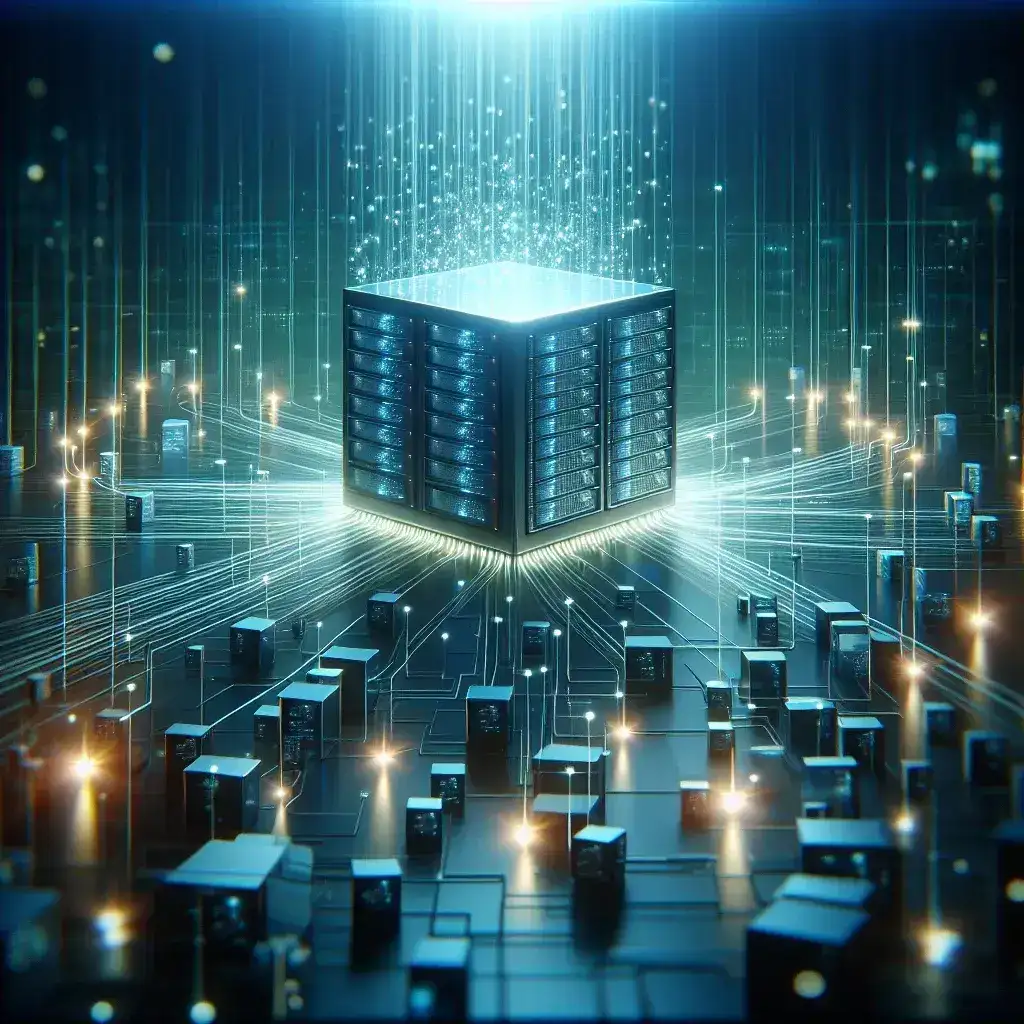

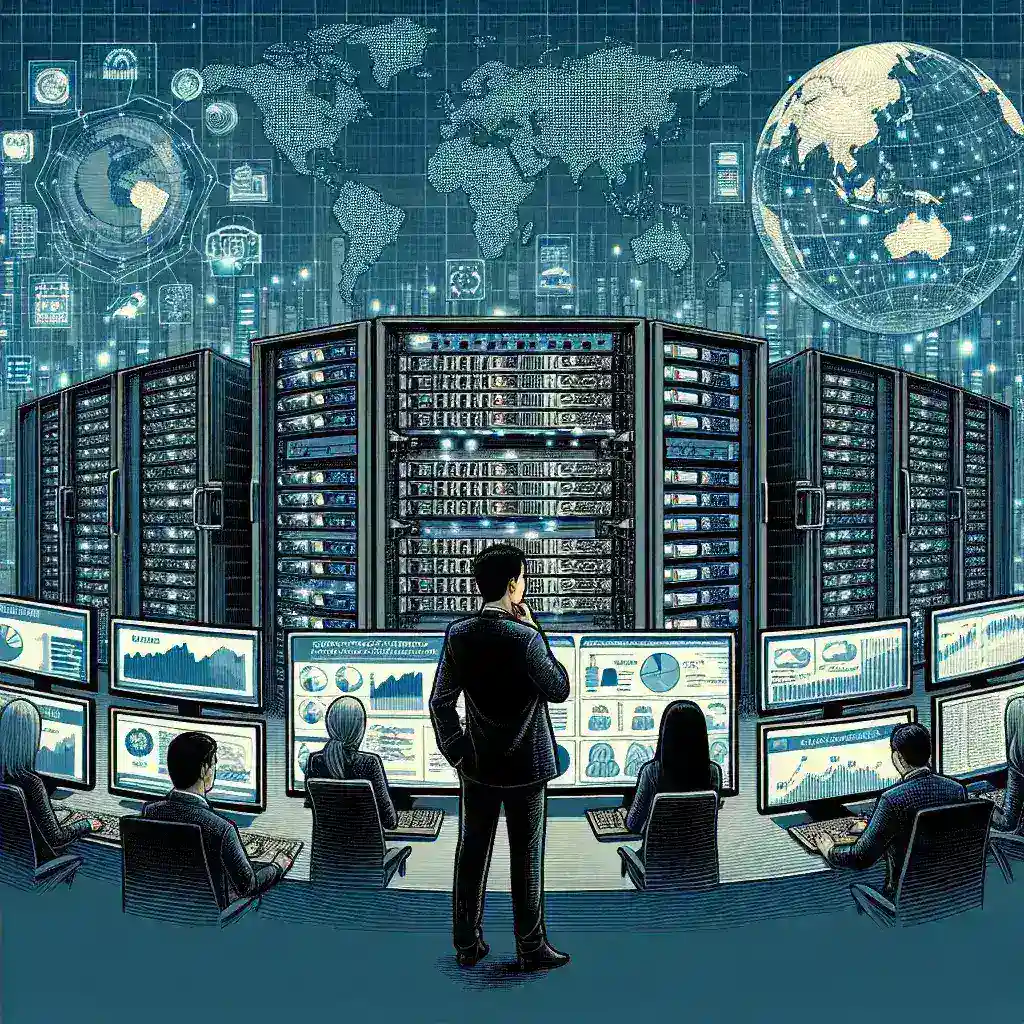

The Best Cloud Backup Services for Small Business Owners

In today's digital era, data protection is essential for small business owners. Whether it is customer information, financial records, or proprietary data, losing critical files can be damaging for any business. Cloud backup services offer an effective solution, providing security, scalability, and accessibility. Here is a look at some of the best cloud backup services suited to small businesses.